Summary

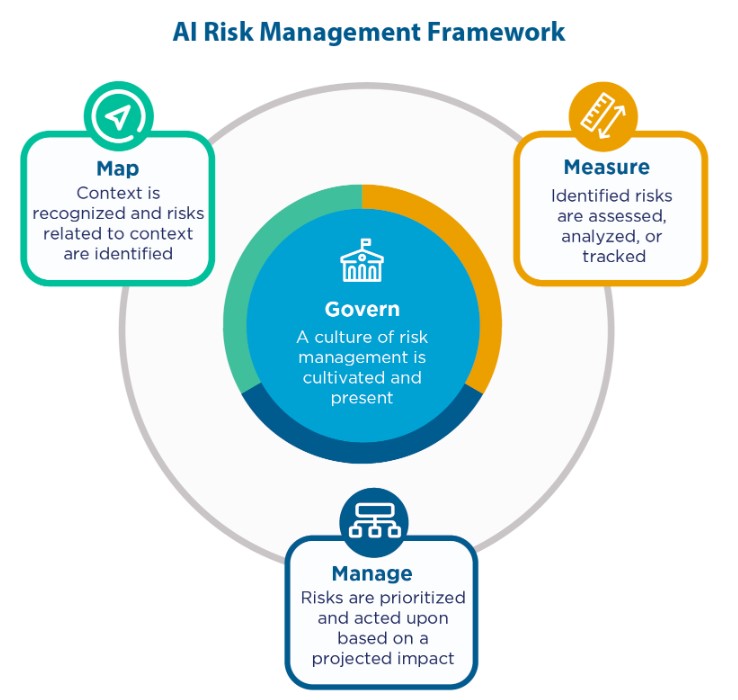

The US Government's shift from the National Robotics Initiative to AI led to the formation of the National Artificial Intelligence Advisory Committee (NAIAC) and a mandate for NIST to develop an AI Risk Management Framework. Both highlight the need to balance innovation with safety. Yet AI-enabled robots present their own set of challenges and opportunities, which demand a comprehensive approach to governance.

- The US Government shifted its focus from the National Robotics Initiative to AI, forming the National Artificial Intelligence Advisory Committee (NAIAC) and requiring NIST to develop an AI Risk Management Framework.

- NAIAC’s first report emphasized the need for AI systems that are safe and responsible while also fostering innovation. Together, NAIAC’s recommendations and NIST’s AI Risk Management Framework offer a roadmap for governing responsible AI and robots and outline its essential building blocks.

- The US policy response has conflated AI and robotics, potentially diverting attention from physical safety and reliability concerns.

- AI-enabled robots offer opportunities and challenges, requiring attention to physical safety, biases, and privacy concerns.

- The embodiment of robots introduces novel issues, such as the perpetuation of biases and the potential for social manipulation.

Robots and the NIST AI Risk Management Framework

By Andra Keay, Managing Director and Founder of Silicon Valley Robotics

Figure: Functions organize AI risk management activities at their highest level to govern, map, measure, and manage AI risks. Governance is designed to be a cross-cutting function to inform and be infused throughout the other three functions.

In 2022, the US Government ended the National Robotics Initiative and embraced AI instead, forming the National Artificial Intelligence Advisory Committee (NAIAC) and requiring NIST to develop an AI Risk Management Framework. In May 2023, NAIAC released its first report, framing AI as “a technology that requires immediate, significant, and sustained government attention.”

“The US Government must ensure AI-driven systems are safe and responsible, while also fueling innovation and opportunity at the public and private levels.” (NAIAC May 2023 Report)

The US should be able to harness the benefits of AI while simultaneously addressing its challenges and risks. Over the last year, AI has dominated the technology news cycle and venture capital interest, pushing it to the front of the congressional line. But the rapid US policy response has obscured the fact that AI is now also the term used in government to describe robotics, whereas in the past, robotics included AI. A far better term for the current national focus on AI would be A/IS, or Autonomous and Intelligent Systems, but that ship has sailed.

One of the major risks of this new focus on AI is that attention is directed away from physical safety and product reliability. Another is the presumption that robots need not be discussed in AI policy recommendations at all. Previously, we had the inverse situation: policy was focused on robotics, and it was easier to ignore some of the significant issues of AI and embodied AI, such as discrimination, digital manipulation, and data exploitation.

Shining the light on the hidden side of robots

AI-enabled robots offer great opportunities. Older robots were simply programmable machines, wonderful at performing repetitive, high-speed, heavy, and often dangerous handling tasks. In the last ten years, robots and autonomous vehicles have gained enough artificial intelligence to fundamentally change how they work. Modern robots are more flexible, adapt to changes in their environment, and can plan actions and revise those plans as needed. They are full of sensors, such as cameras, lidar, radar, touch sensors, and microphones for voice. They are also frequently cloud-connected, networked, or teleoperated.

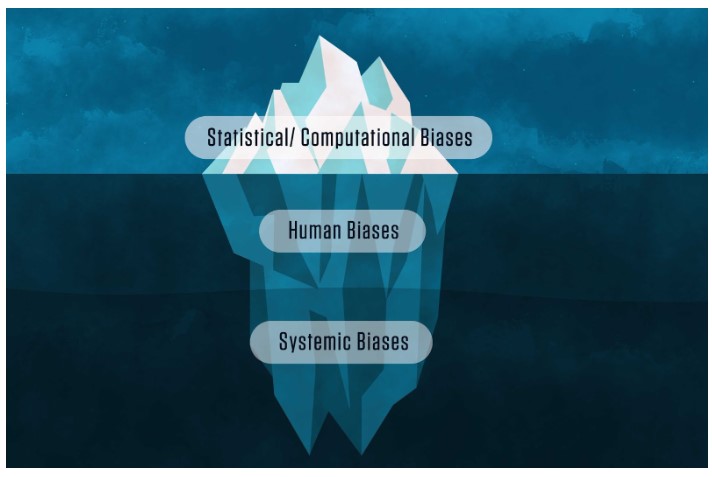

AI-enabled robots present unique risks: not just physical safety issues, but also all of the issues associated with AI. A robot might fail to recognize the presence of someone with dark skin, or draw conclusions about a person’s behavior in a store from biased or incomplete data, and then run into that person rather than steer away. Indeed, a major risk is that humans often assume AI-enabled systems work well in all settings. Rightly or wrongly, AI-enabled systems are often perceived as more objective than humans or as more capable than conventional software.

AI-enabled robots also carry many hidden issues. Robots must collect data about the world around them to function, and much of this data is transmitted to other locations or seen by people who are not physically present, creating unseen privacy issues. Unlike traditional software, AI systems are trained on data, and that data can change over time. Combined with the complexity of AI models and their rapid iteration, this can cause sudden and unpredictable changes in behavior in real-world situations.

Figure: Bias in AI systems is often seen as a technical problem, but the NIST report acknowledges that a great deal of AI bias stems from human, systemic, and institutional biases. Credit: N. Hanacek/NIST

The embodiment of robots, particularly robots that interact with people, creates novel issues. A disproportionate number of robots (and chatbots) use female voices, which perpetuates the objectification of women and the historical association of women with service and hospitality roles.

Robots capable of social interaction are also capable of social manipulation, perhaps at a more unsettling level than ever before. Consider the new opportunities and risks of interactive internet-enabled objects, particularly objects with a sweet voice and attractive appearance!

Managing and Mitigating the Risks

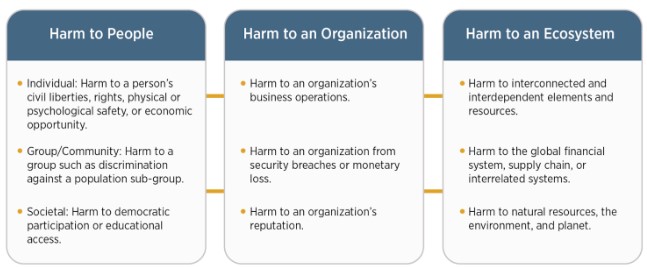

Figure: Examples of potential harms related to AI systems. Trustworthy AI systems and their responsible use can mitigate negative risks and contribute to benefits for people, organizations, and ecosystems.

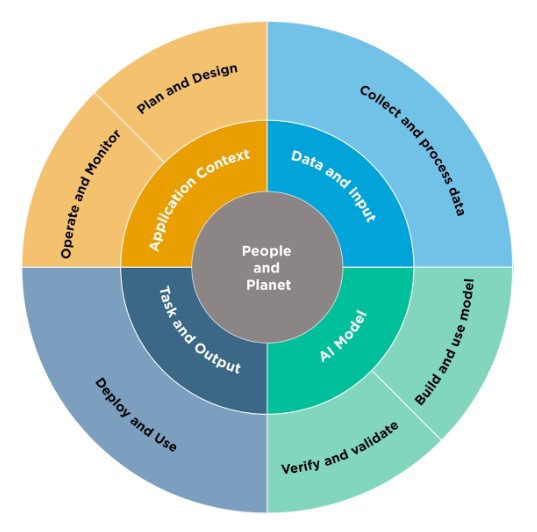

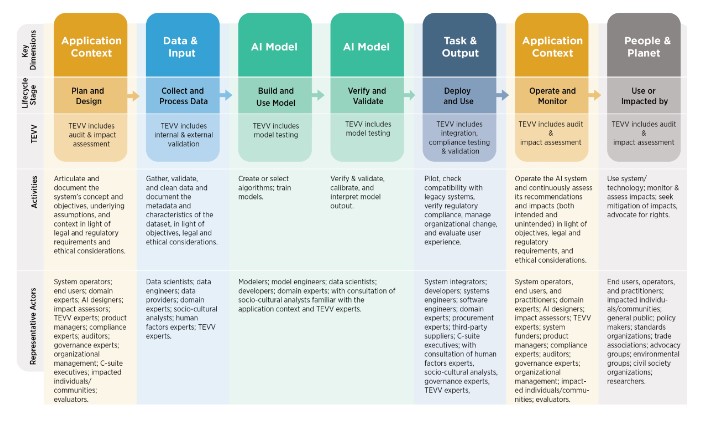

Figure: Lifecycle and Key Dimensions of an AI System. Modified from OECD (2022) OECD Framework for the Classification of AI systems — OECD Digital Economy Papers. The two inner circles show AI systems’ key dimensions, and the outer circle shows AI lifecycle stages. Ideally, risk management efforts start with the Plan and Design function in the application context and are performed throughout the AI system lifecycle.

NAIAC, the NIST AI Risk Management Framework, and the NIST AI RMF Playbook provide a roadmap for developing and governing trustworthy, responsible AI and robots. The essential building blocks, which NIST describes as the characteristics of trustworthy AI, are:

- Validity and Reliability

- Safety

- Security and Resiliency

- Accountability and Transparency

- Explainability and Interpretability

- Privacy

- Fairness with Mitigation of Harmful Bias

Any organization that has deployed robots in the workplace will already have undertaken a risk assessment and developed a management plan. You can now use the NIST AI Risk Management Knowledge Base to assess your smarter, AI-enabled robots more completely.
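One way to extend an existing robot risk assessment along these lines is a simple risk register that tags each hazard with one of the seven building blocks and ranks it, so gaps become visible. The Python sketch below is a minimal, hypothetical illustration: the `RiskEntry` class, the 1 to 5 likelihood and severity scales, and the likelihood × severity score are assumptions borrowed from conventional risk matrices, not part of the NIST framework or any NIST tooling.

```python
from dataclasses import dataclass
from enum import Enum


class Characteristic(Enum):
    """The seven building blocks of trustworthy AI, in this article's wording."""
    VALIDITY_RELIABILITY = "Validity and Reliability"
    SAFETY = "Safety"
    SECURITY_RESILIENCY = "Security and Resiliency"
    ACCOUNTABILITY_TRANSPARENCY = "Accountability and Transparency"
    EXPLAINABILITY_INTERPRETABILITY = "Explainability and Interpretability"
    PRIVACY = "Privacy"
    FAIRNESS = "Fairness with Mitigation of Harmful Bias"


@dataclass
class RiskEntry:
    # Hypothetical register entry; the fields and 1-5 scales are illustrative assumptions.
    description: str
    characteristic: Characteristic
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (catastrophic)

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scale.
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Likelihood x severity product, as in a classic risk matrix.
        return self.likelihood * self.severity


def ranked(register: list[RiskEntry]) -> list[RiskEntry]:
    """Return entries sorted from highest to lowest score."""
    return sorted(register, key=lambda entry: entry.score, reverse=True)


def uncovered(register: list[RiskEntry]) -> list[Characteristic]:
    """Return the building blocks that no entry in the register addresses yet."""
    covered = {entry.characteristic for entry in register}
    return [c for c in Characteristic if c not in covered]


if __name__ == "__main__":
    # Example hazards for a hypothetical AI-enabled mobile robot.
    register = [
        RiskEntry("Robot collides with a worker in a shared aisle",
                  Characteristic.SAFETY, 2, 5),
        RiskEntry("Person detector under-performs on darker skin tones",
                  Characteristic.FAIRNESS, 3, 5),
        RiskEntry("Camera footage streamed to a cloud teleoperation service",
                  Characteristic.PRIVACY, 4, 3),
    ]
    for entry in ranked(register):
        print(entry.score, entry.characteristic.value, "-", entry.description)
    print("Not yet assessed:", [c.value for c in uncovered(register)])
```

Running the script prints the hazards from highest to lowest score and lists the building blocks the register has not yet addressed. That second list is the useful part: a register that only covers physical safety will immediately show that bias, privacy, and the other characteristics have not been considered.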

Figure: AI actors across AI lifecycle stages. See Appendix A of the NIST AI RMF for detailed descriptions of AI actor tasks, including details about testing, evaluation, verification, and validation tasks. Note that AI actors in the AI Model dimension (Figure 2 in the NIST AI RMF) are separated as a best practice, with those building and using the models separated from those verifying and validating the models.

By embracing these essential building blocks, organizations can harness the potential of smarter, AI-enabled robots while safeguarding against the risks of this transformative technology. The NIST AI Risk Management Knowledge Base can help us assess our AI-enabled robots more thoroughly and build a safer, more responsible future.